Medieval Manuscripts

Overview

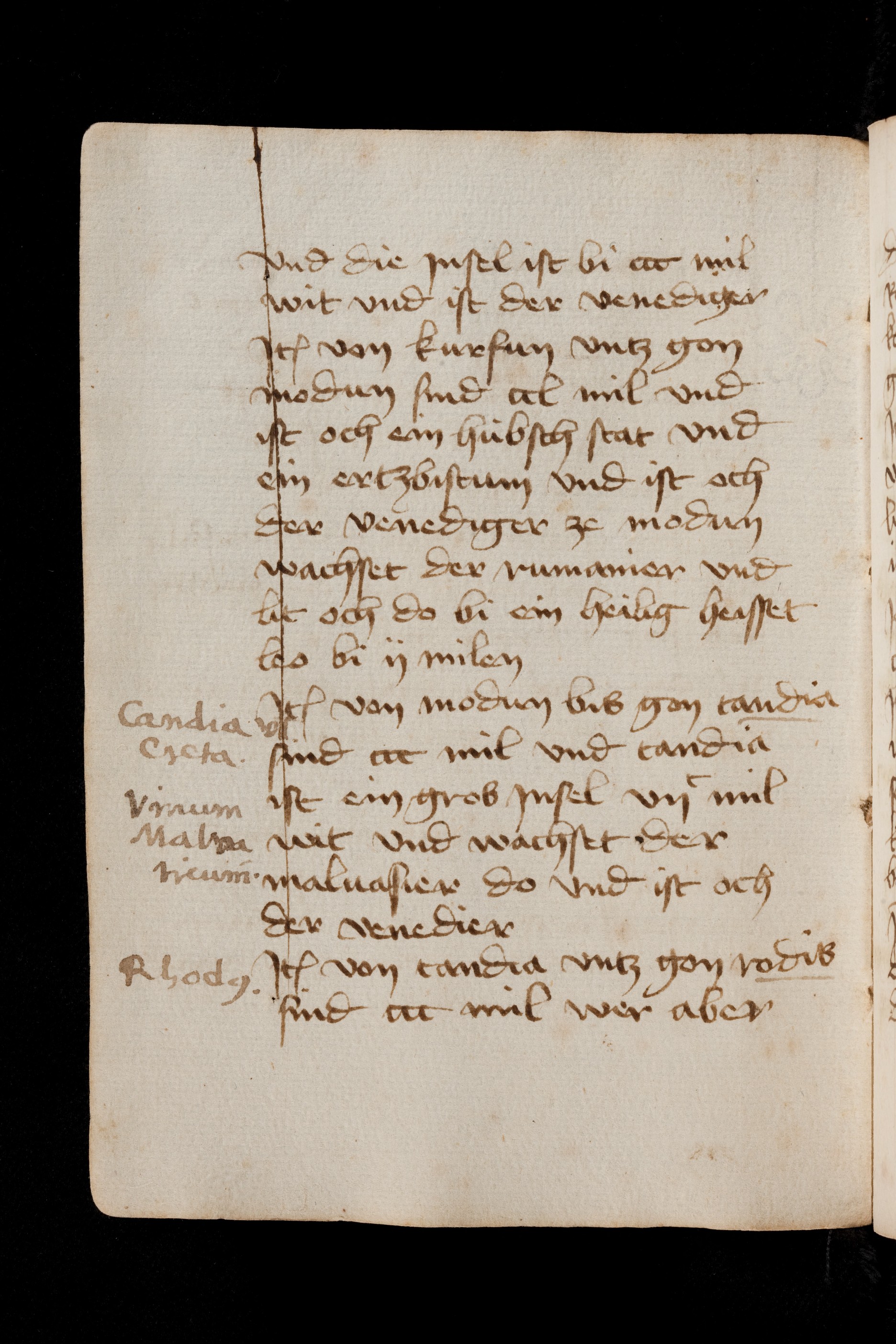

This benchmark evaluates the ability of language models to extract structured information from medieval manuscripts. It focuses on the extraction of text from digitized images. The benchmark tests the model's ability to:

- Correctly identify the sections that contain handwritten text (e.g., when a page has two columns or writing in the margins)

- Extract the text of every section, namely "text" and "addition1", "addition2", "addition3", etc.

- Maintain historical spelling, punctuation, and formatting

- Include the folio number, if present

Dataset Description

| Data Type | Images (JPG, 1872x2808, ~1.2 MB each) |
|---|---|
| Amount | 12 images |
| Origin | www.e-codices.ch/de/description/ubb/H-V-0015/HAN |
| Signature | Basel, Universitätsbibliothek, H V 15 |
| Language | Late medieval German |
| Content Description | 15th century manuscript, written by two scribes |
| Time Period | 15th century |
| License | Academic use |
| Tags | manuscript-pages, text-like, handwritten-source, medieval-script, century-15th, language-german |

| Role | Contributors |
|---|---|
| Domain expert | ina_serif |
| Data curator | ina_serif |
| Annotator | ina_serif |
| Analyst | maximilian_hindermann |
| Engineer | maximilian_hindermann, ina_serif |

Ground Truth

The ground truth for each page is structured as in the following example.

{
  "[3r]": [
    {
      "folio": "3",
      "text": "Vnd ein pferit die mir vnd\n minen knechten vber hulfend\n den do was nienan kein weg\n denne den wir machtend\n vnd vielend die knecht dick\n vnd vil in untz an den ars\n vnd die pferit vntz an die \n settel vnd was ze mol ein grosser\n nebel dz wir kum gesachend\n vnd also mit grosser arbeit kome\n wir ze mittem tag zuo sant\n kristoffel vff den berg Do\n Do sach ich die buecher Do gar\n vil herren wopen in stond\n die ir stür do hin geben hand\n do stuond mines vatters seligen\n wopen och in dem einen",
      "addition1": ""
    }
  ]
}

Scoring

The benchmark uses two complementary metrics to evaluate the accuracy of extracted text: Fuzzy String Matching and Character Error Rate (CER).

Scoring a page

Each element ("folio", "text", and "addition1", "addition2", "addition3", etc.) is scored by comparing it to the corresponding ground truth entry using the following process:

- Entry Matching: Model responses are matched to ground truth entries by position. Ground truth entries are sorted alphabetically by folio reference (e.g., [3r], [4v]); the first response entry is then matched to the first ground truth entry, the second to the second, and so on.

- Field Comparison: For each matched entry, the individual fields (folio, text, additions) of the model's response are compared with the ground truth.

- Text Comparison: Two metrics are calculated for each field:

  - Fuzzy Score: Text content is compared using fuzzy string matching, resulting in a similarity score between 0.0 and 1.0 (higher is better).

  - Character Error Rate (CER): The Levenshtein distance between prediction and reference, divided by the length of the reference text, resulting in an error rate between 0.0 and 1.0 (lower is better).

- Empty Field Handling: Fields that are empty in both the ground truth and the prediction are excluded from scoring, so correctly empty fields do not affect the score.

- Missing Content: Fields that have content in the ground truth but are missing from the response receive a fuzzy score of 0.0 and a CER of 1.0.
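The page-level procedure above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the benchmark's own implementation: the fuzzy score is approximated with the standard library's `difflib.SequenceMatcher.ratio()`, the response is assumed to have the same shape as the ground truth with its entries taken in the order the model emitted them, only fields present in the ground truth are scored, and a field that is empty in the ground truth but filled in the response is treated (by assumption) like missing content. The CER is not capped at 1.0 here. The names `score_page`, `field_scores` and `levenshtein` are hypothetical.

```python
from difflib import SequenceMatcher
from typing import Dict, List, Optional, Tuple

# Assumed page shape, e.g. {"[3r]": [{"folio": "3", "text": "...", "addition1": ""}]}
Page = Dict[str, List[Dict[str, str]]]


def levenshtein(a: str, b: str) -> int:
    """Edit distance: minimum number of single-character insertions, deletions, substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # delete ca
                            curr[j - 1] + 1,            # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = curr
    return prev[-1]


def field_scores(reference: str, prediction: str) -> Optional[Tuple[float, float]]:
    """Return (fuzzy, cer) for one field, or None if the field is excluded."""
    if not reference and not prediction:
        return None          # empty in both: excluded from scoring
    if not reference or not prediction:
        return 0.0, 1.0      # missing content (and, by assumption, spurious content)
    fuzzy = SequenceMatcher(None, reference, prediction).ratio()  # stand-in fuzzy metric
    cer = levenshtein(reference, prediction) / len(reference)
    return fuzzy, cer


def score_page(ground_truth: Page, response: Page) -> List[Tuple[str, float, float]]:
    """Score every ground-truth field of one page as (field, fuzzy, cer)."""
    # Ground-truth entries sorted alphabetically by folio key; response kept in emitted order.
    gt_entries = [entry for key in sorted(ground_truth) for entry in ground_truth[key]]
    resp_entries = [entry for entries in response.values() for entry in entries]
    results = []
    for index, gt_entry in enumerate(gt_entries):
        # Positional matching: i-th response entry against i-th ground-truth entry.
        resp_entry = resp_entries[index] if index < len(resp_entries) else {}
        for field, reference in gt_entry.items():
            scores = field_scores(reference, resp_entry.get(field, ""))
            if scores is not None:
                results.append((field, *scores))
    return results
```

For a one-entry page whose ground truth text is "Vnd ein pferit die mir vnd" and whose response reads "und ein pferit die mir vnd" (same folio, empty `addition1` on both sides), this sketch returns `("folio", 1.0, 0.0)` and a text score of roughly `(0.96, 0.04)`, and skips `addition1`.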

Fuzzy Matching Example

For a ground truth text line:

"Vnd ein pferit die mir vnd"

And a model response:

"und ein pferit die mir vnd"

The fuzzy matching would yield a high similarity score (approximately 0.96 with a standard Levenshtein-based ratio) despite the minor spelling difference ("Vnd" vs. "und").

Character Error Rate (CER) Example

For the same example, the CER would be calculated as follows:

- Calculate the Levenshtein distance (edit distance): 1 (a single character substitution, "V" → "u")

- Calculate the CER: 1 / 26 (the length of the reference text) ≈ 0.04

This results in a very low CER (approximately 0.04), indicating excellent performance.
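The CER calculation for this example can be reproduced with a small dynamic-programming Levenshtein distance. This is a minimal sketch; the benchmark may rely on a library implementation instead, and the function name `levenshtein` is illustrative.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions and substitutions turning a into b."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # deletion
                            curr[j - 1] + 1,            # insertion
                            prev[j - 1] + (ca != cb)))  # substitution (0 if equal)
        prev = curr
    return prev[-1]


reference = "Vnd ein pferit die mir vnd"
prediction = "und ein pferit die mir vnd"

distance = levenshtein(reference, prediction)   # 1 substitution: "V" -> "u"
cer = distance / len(reference)                 # 1 / 26
print(distance, len(reference), round(cer, 2))  # 1 26 0.04
```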

Scoring the collection

The overall benchmark scores are calculated as follows:

- Fuzzy Score: The average of the fuzzy matching scores across all texts, producing a value between 0.0 and 1.0, where higher scores indicate better performance.

- CER Score: The average of the character error rates across all texts, producing a value between 0.0 and 1.0, where lower scores indicate better performance.

These metrics account for:

- Correctly identified sections (text and additions)

- Correctly matched folio numbers

- Textual similarity to the ground truth

A perfect result would have a fuzzy score of 1.0 and a CER of 0.0, indicating that all texts were identified and transcribed with perfect fidelity to the original text.
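The collection-level aggregation can be sketched as a plain mean over every scored field on every page. This sketch reads "across all texts" as a flat average over fields rather than an average of per-page averages; that interpretation, the function name `collection_scores`, and the sample input values are assumptions for illustration only.

```python
from statistics import mean
from typing import Iterable, Tuple


def collection_scores(field_results: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Average (fuzzy, cer) pairs over all scored fields of all pages.

    Fields excluded at page level (empty in both ground truth and response)
    are assumed to have been dropped already, so they do not enter the mean.
    """
    pairs = list(field_results)
    if not pairs:
        raise ValueError("no scored fields")
    fuzzy_score = mean(fuzzy for fuzzy, _ in pairs)  # higher is better
    cer_score = mean(cer for _, cer in pairs)        # lower is better
    return fuzzy_score, cer_score


# Illustrative input: a perfect folio, a near-perfect text, and one missing addition.
fuzzy_score, cer_score = collection_scores([(1.0, 0.0), (50 / 52, 1 / 26), (0.0, 1.0)])
print(round(fuzzy_score, 3), round(cer_score, 3))  # 0.654 0.346
```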

Benchmark Sorting

In the benchmark overview page, medieval manuscript results are sorted by fuzzy score in descending order (highest scores at the top), allowing for quick identification of the best-performing models.
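Ordering for the overview page amounts to a descending sort on the fuzzy score. The sketch below assumes each result is a record with hypothetical `model`, `fuzzy` and `cer` keys; the model names and numbers are placeholders, not benchmark results.

```python
# Placeholder records, not real benchmark results.
results = [
    {"model": "model-a", "fuzzy": 0.71, "cer": 0.32},
    {"model": "model-b", "fuzzy": 0.84, "cer": 0.18},
    {"model": "model-c", "fuzzy": 0.62, "cer": 0.45},
]

# Highest fuzzy score first; sorted() is stable, so ties keep their original order.
leaderboard = sorted(results, key=lambda r: r["fuzzy"], reverse=True)
for row in leaderboard:
    print(f'{row["model"]}: fuzzy={row["fuzzy"]:.2f}, cer={row["cer"]:.2f}')
```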

Observations & Comments

Planned extensions to this benchmark include:

- Expanded Dataset: Including more pages and time periods from medieval manuscripts

- Multi-language Support: Extending to manuscripts with mixed-language content